Introduction

A decision tree is a basic classification and regression method. The decision tree model has a tree structure. In a classification problem, it represents the process of classifying instances based on their features. It can be viewed as a collection of if-then rules, or as a conditional probability distribution defined over the feature space and the class space.
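
To make the if-then view concrete, here is a minimal sketch of a hand-written tree. The loan-style feature names (`owns_house`, `has_job`) and the class labels are hypothetical and chosen only for illustration.

```python
def classify(applicant):
    """A hand-written decision tree: each root-to-leaf path is one if-then rule."""
    if applicant["owns_house"]:      # rule 1: owns a house -> approve
        return "approve"
    if applicant["has_job"]:         # rule 2: no house, but has a job -> approve
        return "approve"
    return "reject"                  # rule 3: neither -> reject


print(classify({"owns_house": False, "has_job": True}))
```

Every instance follows exactly one path, so the rules are mutually exclusive and together cover all cases.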

The main advantages are that the model is readable and classification is fast. During learning, the training data are used to build a decision tree model according to the principle of minimizing a loss function. During prediction, the decision tree model is used to classify new data.

Decision tree learning usually consists of three steps: feature selection, decision tree generation, and decision tree pruning.

Decision tree learning

Goal: Build a decision tree model from a given training data set so that it classifies instances correctly. Decision tree learning essentially induces a set of classification rules from the training data set. There may be multiple decision trees that classify the training data correctly, or none. The tree to choose is one that contradicts the training data as little as possible and generalizes well. Viewed as a conditional probability model, the selected model should not only fit the training data well but also predict unknown data well.

Loss function: usually a regularized maximum likelihood function.

Strategy: minimize the objective function, with the loss function as the objective.
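
One common way to write such a regularized objective is the cost-complexity form below. The text does not give a formula, so this is an assumption. The symbols are introduced here for illustration: T is the tree, |T| its number of leaf nodes, N_t the number of training samples at leaf t, N_tk the number of those samples in class k, and α ≥ 0 the regularization weight.

$$
C_\alpha(T) = \sum_{t=1}^{|T|} N_t H_t(T) + \alpha |T|,
\qquad
H_t(T) = -\sum_{k} \frac{N_{tk}}{N_t} \log \frac{N_{tk}}{N_t}
$$

The first term is the negative log-likelihood of the training data under the tree, so it measures fit. The second term penalizes tree size, so a larger α favors simpler trees.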

Because selecting the optimal decision tree from all possible decision trees is an NP-complete problem, decision tree learning in practice usually uses heuristic methods to solve this optimization problem approximately, and the resulting decision tree is sub-optimal.

A decision tree learning algorithm usually selects the optimal feature recursively and partitions the training data according to that feature, so that each resulting subset is classified as well as possible. This process covers feature selection, decision tree generation, and decision tree pruning.

Pruning:

Purpose: To make the tree simpler, so that it generalizes better.

Steps: Remove leaf nodes that are too finely subdivided, fall back to their parent node or to an even higher node, and then turn that parent or higher node into a new leaf node.

The generation of the decision tree corresponds to local model selection, and the pruning of the decision tree corresponds to global model selection. Generation considers only the local optimum, while pruning considers the global optimum.
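
The pruning step can be sketched as a bottom-up pass that collapses a subtree into a single leaf whenever doing so does not increase a regularized cost. This is a minimal sketch, not a definitive implementation: the `Node` class, the `prune` function, the entropy-based leaf cost, and the per-leaf penalty `alpha` are all assumptions introduced for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    counts: dict                                   # class label -> training samples at this node
    children: list = field(default_factory=list)   # an empty list marks a leaf


def leaf_cost(counts):
    """Sample count times empirical entropy of the class distribution at a node."""
    n = sum(counts.values())
    return -sum(c * math.log2(c / n) for c in counts.values() if c > 0)


def prune(node, alpha):
    """Prune in place, bottom-up; return the regularized cost of the pruned subtree."""
    if not node.children:
        return leaf_cost(node.counts) + alpha
    subtree_cost = sum(prune(child, alpha) for child in node.children)
    collapsed_cost = leaf_cost(node.counts) + alpha
    if collapsed_cost <= subtree_cost:
        node.children = []          # fall back to the parent, which becomes a leaf
        return collapsed_cost
    return subtree_cost


def example_tree():
    return Node({"a": 9, "b": 1}, [Node({"a": 5}), Node({"a": 4, "b": 1})])


light = example_tree()
prune(light, alpha=0.5)             # small penalty: the split is kept
heavy = example_tree()
prune(heavy, alpha=2.0)             # large penalty: the root becomes a leaf
print(len(light.children), len(heavy.children))
```

The decision at each node compares the whole subtree against a single leaf, which is what makes pruning a global choice compared with the greedy, node-by-node choices made during generation.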

Feature selection:

If the number of features is large, select features at the beginning of decision tree learning, keeping only those that have sufficient classification ability for the training data. (For example, a name is not selected as a feature.)
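
Classification ability needs a concrete measure before features can be filtered. One widely used measure is information gain, the criterion used by ID3. The text does not name a measure, so using it here is an assumption. In the sketch below, the toy data, the feature names, and the threshold of 0.1 are likewise hypothetical.

```python
import math
from collections import Counter


def entropy(labels):
    """Empirical entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(rows, labels, feature):
    """Entropy of the labels minus their expected entropy after splitting on `feature`."""
    n = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[feature], []).append(label)
    remainder = sum(len(group) / n * entropy(group) for group in groups.values())
    return entropy(labels) - remainder


rows = [
    {"has_job": "yes", "shift": "day"},
    {"has_job": "yes", "shift": "night"},
    {"has_job": "no", "shift": "day"},
    {"has_job": "no", "shift": "night"},
]
labels = ["approve", "approve", "reject", "reject"]

gains = {f: information_gain(rows, labels, f) for f in ("has_job", "shift")}
selected = [f for f, g in gains.items() if g > 0.1]   # keep only informative features
print(gains, selected)
```

In this toy data `has_job` separates the classes perfectly and `shift` carries no information, so only `has_job` passes the filter.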

Typical algorithms

Typical decision tree algorithms include ID3, C4.5, and CART.

In December 2006, the IEEE International Conference on Data Mining (ICDM), an authoritative international academic organization, selected the top ten classic algorithms in the field of data mining, and the C4.5 algorithm ranked first. C4.5 is a classification decision tree algorithm in machine learning, and its core is the ID3 algorithm. The classification rules generated by C4.5 are easy to understand and have a high accuracy rate. However, during tree construction the data set must be scanned and sorted multiple times, which makes the algorithm inefficient in practical applications.

The advantages of the decision tree algorithm are as follows:

(1) High classification accuracy;

(2) The generated model is simple;

(3) It is robust to noisy data.

Therefore, it is one of the most widely used inductive inference algorithms at present, and it has received extensive attention from data mining researchers.

Basic idea

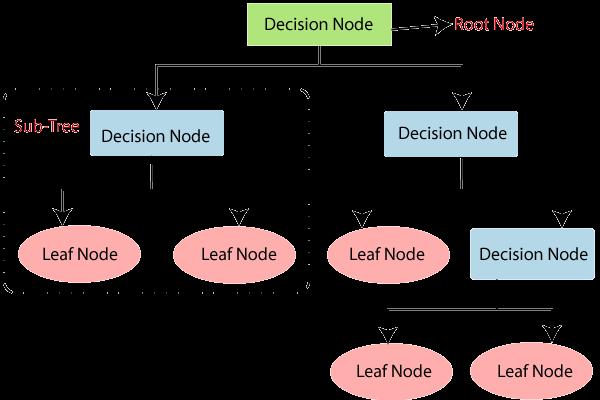

1) The tree starts with a single node representing the training samples.

2) If the samples all belong to the same category, the node becomes a leaf and is labeled with that category.

3) Otherwise, the algorithm selects the attribute with the greatest classification ability as the current node of the decision tree.

4) According to the different values of the attribute at the current decision node, the training sample set is divided into several subsets. Each value forms a branch, so there are as many branches as there are values. For each subset obtained in this way, the previous steps are repeated recursively to form a decision tree on that partition. Once an attribute appears at a node, there is no need to consider it in any descendant of that node.

5) The recursive partitioning stops only when one of the following conditions is true:

① All samples at a given node belong to the same category.

② There are no remaining attributes that can be used to further divide the samples. In this case, majority voting is used to convert the node into a leaf, labeled with the category that has the largest number of tuples among the samples. The class distribution of the node's samples can also be stored.

③ If a branch contains no samples, a leaf is created with the majority class of the samples.
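
Steps 1) to 5) can be sketched as a short recursive function. This is a sketch under stated assumptions rather than any particular published algorithm: the names `split`, `purity_score`, `build_tree`, and `predict` are hypothetical, and `purity_score` is a simple stand-in for "classification ability" (ID3, for instance, would use information gain instead). Branches are created only for attribute values that actually occur, so condition ③ is handled at prediction time by falling back to the node's majority class.

```python
from collections import Counter


def split(rows, labels, feature):
    """Group rows and labels by the value each row takes for `feature`."""
    groups = {}
    for row, label in zip(rows, labels):
        part = groups.setdefault(row[feature], ([], []))
        part[0].append(row)
        part[1].append(label)
    return groups


def purity_score(rows, labels, feature):
    """Stand-in criterion: samples classified correctly if each branch predicts its majority class."""
    return sum(Counter(ys).most_common(1)[0][1]
               for _, ys in split(rows, labels, feature).values())


def build_tree(rows, labels, features, score=purity_score):
    majority = Counter(labels).most_common(1)[0][0]
    # Stop when all samples share one class, or no attributes remain (majority vote).
    if len(set(labels)) == 1 or not features:
        return majority
    best = max(features, key=lambda f: score(rows, labels, f))
    remaining = [f for f in features if f != best]   # a used attribute is not reconsidered
    branches = {value: build_tree(sub_rows, sub_labels, remaining, score)
                for value, (sub_rows, sub_labels) in split(rows, labels, best).items()}
    return {"feature": best, "branches": branches, "default": majority}


def predict(tree, row):
    while isinstance(tree, dict):
        # An unseen attribute value falls back to the node's majority class.
        tree = tree["branches"].get(row[tree["feature"]], tree["default"])
    return tree


rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "play", "stay"]
tree = build_tree(rows, labels, ["outlook", "windy"])
print(predict(tree, {"outlook": "rainy", "windy": "yes"}))
```

Passing the scoring function as a parameter keeps the recursion independent of the selection criterion, so a different measure can be swapped in without touching `build_tree`.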

Construction method

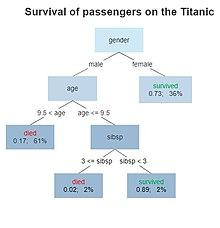

The input to decision tree construction is a set of examples with class labels, and the result is a binary tree or a multiway tree. An internal (non-leaf) node of a binary tree is generally expressed as a logical test, such as a test of the form a = aj, where a is an attribute and aj ranges over the values of that attribute. The edges of the tree are the outcomes of the test. An internal node of a multiway tree (ID3) is an attribute, and its edges are all the values of that attribute, so there are as many edges as there are attribute values. The leaf nodes of the tree are all class labels.

Because of improper data representation, noise, or duplicated subtrees produced during generation, the resulting decision tree can be too large. Simplifying the decision tree is therefore an indispensable step. Finding an optimal decision tree involves the following three optimization problems: ① generating the fewest leaf nodes; ② making the depth of each generated leaf node as small as possible; ③ generating a tree that has the fewest leaf nodes and in which each leaf node has the smallest depth.

Classification and regression tree model

The classification and regression tree (CART) model also consists of feature selection, tree generation, and pruning, and it can be used for both classification and regression.

The CART algorithm consists of the following two steps:

(1) Decision tree generation: Generate a decision tree from the training data set; the generated tree should be as large as possible;

(2) Decision tree pruning: Prune the generated tree using the validation data set and select the optimal subtree, with minimization of the loss function as the pruning criterion.
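
For the generation step, CART grows a binary tree, and for classification the split is commonly chosen by minimizing the Gini index. The text does not describe the criterion, so the sketch below is an illustration under that assumption. It handles a single numeric feature, and the function names `gini` and `best_binary_split` are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini index of a list of class labels: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_binary_split(values, labels):
    """Return (threshold, weighted Gini) of the best split `value <= threshold`.

    The threshold is None when no split improves on the unsplit node.
    """
    n = len(labels)
    best = (None, gini(labels))
    candidates = sorted(set(values))
    for low, high in zip(candidates, candidates[1:]):
        threshold = (low + high) / 2           # midpoint between adjacent distinct values
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        weighted = len(left) / n * gini(left) + len(right) / n * gini(right)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


threshold, impurity = best_binary_split([1.0, 2.0, 3.0, 4.0], ["a", "a", "b", "b"])
print(threshold, impurity)
```

Applying this search recursively to each side of the chosen threshold, until the nodes are pure or too small to split, yields the large tree that the pruning step then cuts back.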